Whitepaper

Introduction to confidential computing

Broadly defined, confidential computing is the protection of data during computation.

While it is now common to protect data in transit (with TLS) and at rest (with encryption), protecting data during computation has only recently become viable.

There are two approaches to achieving this: trusted execution environments, and mathematical methods that are completely trustless but are limited in application and carry performance overhead.

Trusted execution environments

A trusted execution environment is any environment that can make guarantees about the correctness and security of the computation it performs. Here, however, we discuss only the implementations that have the smallest TCB (Trusted Computing Base).

All of these implementations can prove what code is running in them and can have secrets delivered to them securely. Using a process called remote attestation, they prove the integrity of the code to any external party. Data is then exchanged over encrypted channels into and out of the trusted execution environment running the trusted code.
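The core check behind remote attestation can be sketched as follows. This is a minimal illustration, assuming the "measurement" is simply a SHA-384 digest of the code image; the function names and the sample images are hypothetical. Real attestation also verifies the hardware vendor's signature over the measurement, which is omitted here.

```python
import hashlib
import hmac


def measure(image: bytes) -> str:
    """Return a SHA-384 digest of a code image (a stand-in for a TEE measurement)."""
    return hashlib.sha384(image).hexdigest()


def code_is_trusted(reported: str, expected: str) -> bool:
    """Compare the reported and expected measurements in constant time."""
    return hmac.compare_digest(reported, expected)


# Hypothetical code images, for illustration only.
approved_image = b"def check_balance(): ..."
tampered_image = b"def check_balance(): leak()"

# The verifier computes the expected measurement from code it has reviewed.
expected = measure(approved_image)

print(code_is_trusted(measure(approved_image), expected))  # True
print(code_is_trusted(measure(tampered_image), expected))  # False
```

Any change to the code image, however small, yields a different measurement, so the verifier can refuse to deliver secrets to code it has not approved.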

Intel SGX

Intel introduced SGX (Software Guard Extensions) in 2015. It is a set of additional security-related instructions that enable a process to protect itself from the operating system, with the protection provided by the hardware.

However, applications need to be rewritten to use these instructions, which makes the barrier to entry high. The open-source Gramine library OS abstracts away this complexity and lets apps run in enclaves without modification.

Earlier versions had a memory limit of 256 MB, which has since been increased to 1 TB with the 3rd Generation Intel Xeon processors. Details: https://www.intel.com/content/www/us/en/support/articles/000059614/software/intel-security-products.html

The one remaining limitation that can't be overcome is the performance overhead of the security instructions.

AWS Nitro Enclaves

AWS released Nitro Enclaves in 2020. This technology allows an AWS virtual machine (EC2 instance) to carve out a portion of its CPU cores and memory into a secure enclave. Unlike Intel SGX, its TCB (Trusted Computing Base) is the hypervisor rather than the CPU. By design, an enclave has no network access or disk access. It can only communicate with the host virtual machine over a vsock (virtual socket) connection.

Network connectivity can be introduced by tunneling connections over vsock. Similarly, the host VM's disk can be used through a network file system that is encrypted client-side.
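To make the tunneling idea concrete, here is a minimal sketch of length-prefixed message framing over a stream socket, which is one common way to carry requests across such a link. It uses a local `socket.socketpair()` as a stand-in for the enclave-to-host connection so that it runs anywhere; on a Linux host, the same functions could in principle be used with a socket created as `socket.socket(socket.AF_VSOCK, socket.SOCK_STREAM)`. The message contents and helper names are hypothetical.

```python
import socket
import struct


def send_msg(sock: socket.socket, payload: bytes) -> None:
    """Send one message prefixed with its 4-byte big-endian length."""
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, or raise if the peer closes early."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return buf


def recv_msg(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = struct.unpack(">I", recv_exact(sock, 4))
    return recv_exact(sock, length)


# Stand-in for the enclave/host link: a connected local socket pair.
host_side, enclave_side = socket.socketpair()

# The enclave sends a request; the host would forward it to the network.
send_msg(enclave_side, b"GET /balance")
request = recv_msg(host_side)

# The host relays a response back to the enclave.
send_msg(host_side, b"forwarded: " + request)
reply = recv_msg(enclave_side)

host_side.close()
enclave_side.close()
print(reply)
```

Because the host only relays bytes, the enclave should still encrypt the tunneled traffic end to end (for example with TLS terminated inside the enclave).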

AMD SEV-SNP

AMD SEV (Secure Encrypted Virtualization) is the newest of the trusted execution environment technologies available in production today. SEV-SNP, the iteration of SEV released in March 2021, was the first to support the remote attestation that is necessary for confidential computing.

With this technology the enclave is the entire VM. It has strong chip-level security like SGX, performance similar to AWS Nitro Enclaves, and no limitations around network or disk. Programs run out of the box, but the OS needs modifications to support it.

While SEV seems like the best option, there are still some security issues that need to be resolved, as described here: https://www.techradar.com/news/exclusive-theres-a-problem-with-amd-epyc-processors-but-the-company-doesnt-want-to-know

Luckily this issue can be easily resolved with the help of trusted cloud providers.

Mathematical methods

Fully homomorphic encryption

Fully homomorphic encryption (FHE) is direct computation on encrypted data without decryption. First-generation schemes were about a trillion times slower than traditional computation on a CPU, taking around 30 minutes for a single multiplication. Second-generation schemes improved on this by orders of magnitude, bringing times down to seconds rather than minutes. The most modern schemes have brought this down further to tens of milliseconds, finally making FHE applicable to a wide set of applications.
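The idea of computing on ciphertexts can be illustrated with a toy version of the Paillier cryptosystem. Note the assumptions: Paillier is only additively homomorphic (it is not an FHE scheme), the primes below are far too small to be secure, and all names are chosen for this sketch. It requires Python 3.8 or later for the modular inverse via `pow`.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Build a toy Paillier key pair from two primes, using g = n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse of lam; valid because g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Encrypt m (0 <= m < n) under public key n with fresh randomness."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random value in 1..n-1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, private_key, c: int) -> int:
    """Recover the plaintext from ciphertext c."""
    lam, mu = private_key
    return ((pow(c, lam, n * n) - 1) // n * mu) % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiply ciphertexts, which adds the underlying plaintexts mod n."""
    return (c1 * c2) % (n * n)


# Toy primes for illustration only; real keys use primes of ~1024 bits or more.
n, private_key = keygen(1009, 1013)

c1 = encrypt(n, 1200)
c2 = encrypt(n, 345)

# The party holding only c1 and c2 computes the sum without decrypting.
c_sum = add_encrypted(n, c1, c2)
print(decrypt(n, private_key, c_sum))  # 1545
```

Fully homomorphic schemes extend this property to both addition and multiplication, which is what allows arbitrary computation and also what makes them so much more expensive.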

Raw performance is no longer the main barrier to adoption. The problem is that a high level of optimization, often with manual tuning, is needed to achieve that performance.

For more details watch: https://www.youtube.com/watch?v=SGoHbXG5kgk

Even if all these issues are overcome, every party needs to participate in the FHE protocol for it to work. Given this, trusted execution environments will remain useful until every system integrates with optimized FHE.

Secure multiparty computation

Secure multiparty computation is similar to FHE in that it relies on mathematical techniques to preserve privacy. It has the additional requirement that the work be distributed between multiple parties, and privacy holds only as long as those parties don't collude.
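One of the simplest building blocks of secure multiparty computation is additive secret sharing, sketched below. The modulus, the number of parties, and the input values are assumptions made for this illustration; production protocols add authentication and support for multiplication on top of this idea.

```python
import secrets
from typing import List

MODULUS = 2**61 - 1  # arbitrary public modulus chosen for this sketch


def share(value: int, parties: int) -> List[int]:
    """Split value into additive shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares: List[int]) -> int:
    """Combine all shares to recover the secret."""
    return sum(shares) % MODULUS


# Two private inputs, each split among three parties.
a_shares = share(1200, 3)
b_shares = share(345, 3)

# Each party adds the two shares it holds, without seeing either input.
sum_shares = [(a + b) % MODULUS for a, b in zip(a_shares, b_shares)]

print(reconstruct(sum_shares))  # 1545
```

Any subset of fewer than all three shares is uniformly random and reveals nothing about the inputs, which is why the scheme depends on the parties not pooling their shares.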

It has similar drawbacks and advantages to FHE.

How Verifiably uses confidential computing

At Verifiably we will use the best confidential computing technology for the task and are not fixed on any specific technology. Initially we're starting with AWS Nitro Enclaves as they offer the best mix of security, usability and performance for our current goals.

Trusted execution environments in the public cloud

We have a strong opinion on how trusted execution environments should be used. If the TCB is the physical CPU, then evidence of the physical security of the CPU is a must. For example, we consider chips running in an untrusted physical environment unsafe.

See this TechRadar article: https://www.techradar.com/news/exclusive-theres-a-problem-with-amd-epyc-processors-but-the-company-doesnt-want-to-know

The article shows the ramifications of not taking physical security into account, and we suggest how this should be done properly.

That's why we will only run our workloads in secure cloud environments where this security can be proved.

The importance of verifiable builds

The most popular use of confidential computing is for a customer of the cloud to be able to verify that the workload they deployed is indeed the workload running in the cloud.

Trusted execution environments allow verification of deployed binaries (compiled code). This works well for that use case, since the customer compiles the code and knows that the binary was produced from the source code they intended. They also have the option of signing the binary.

At Verifiably we see confidential computing as an enabler of new use cases, not just as a way to further secure our workloads in the cloud.

If one party wants to deploy a workload to a trusted execution environment and prove what code is running to a second party, they can only prove which binary is running.

This introduces another aspect to be proved: which code produced the binary.

To solve this problem, we use AWS CodeBuild to provide an immutable trail that proves a given binary was produced from the specified code.
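The link between source and binary can be pictured as a record that ties the two digests together. The sketch below is illustrative only: `BuildRecord` and the helper functions are hypothetical names, and this is not the AWS CodeBuild API or the format of its logs. It assumes the record itself comes from a build service that the verifier trusts.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildRecord:
    """Hypothetical entry in a build trail: which source produced which binary."""
    source_digest: str
    binary_digest: str


def digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def binary_matches_source(record: BuildRecord, audited_source: bytes,
                          attested_binary_digest: str) -> bool:
    """Check that the attested binary was built from the source we audited."""
    return (record.source_digest == digest(audited_source)
            and record.binary_digest == attested_binary_digest)


# Placeholder source and binary contents, for illustration only.
source = b"print('balance check')"
binary = b"\x7fELF placeholder compiled bytes"
record = BuildRecord(digest(source), digest(binary))

print(binary_matches_source(record, source, digest(binary)))            # True
print(binary_matches_source(record, b"print('leak')", digest(binary)))  # False
```

The second party audits the source, takes the binary digest from the enclave's attestation, and uses the trusted record to connect the two.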

We have ongoing research into producing verifiable builds that do not depend on a trusted build server. The results are promising, and we look forward to sharing our progress towards the end of 2022.

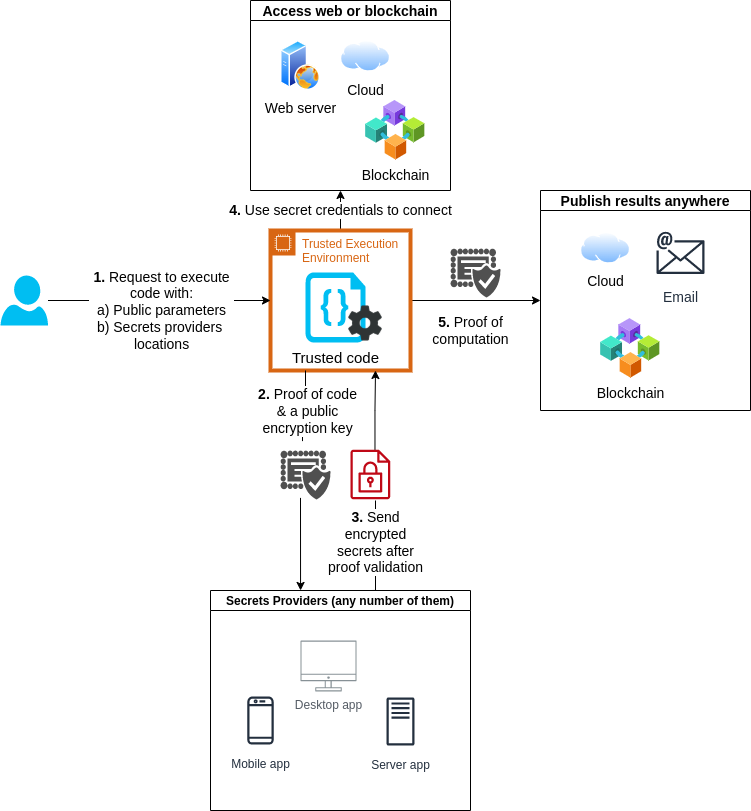

Data flow diagram

vFunction example walkthrough

Proving a bank account has a balance > x

- The bank account owner runs the secret provider app. They configure it to release their account credentials only when a trusted execution environment presents proof that it is running the code that connects to their account, checks whether the balance is > x, and does nothing else. The secret provider app has a unique id with which it can be contacted. The function is executed with this unique id as a parameter by calling Verifiably's API. Verifiably doesn't need to be trusted at any point in this process. For example, if Verifiably passes a different id than required, the program will simply fail, but the credentials can't be compromised.

- Verifiably runs the function in a trusted execution environment on behalf of the customer. If Verifiably submits the wrong function, its certificate won't match that of the intended function and the credentials can't be obtained. If we submit the correct function, it can generate the correct certificate signed by the AWS Nitro Enclave root key. Embedded within the certificate is a public key whose private key never leaves the secure enclave.

- The secret provider app validates the certificate and only releases the credentials if it matches the expected code. It also encrypts the credentials with the public key so that even if anyone were to intercept a valid certificate or the credentials, they would be useless except to the secure enclave running the correct code.

- The code decrypts the credentials with the corresponding private key and uses the credentials to make the API calls to the bank to check the balance. Since there's no interactive access to the enclave, no one can log into it and obtain any sensitive info from memory. The computation is provably private.

- Finally the produced output is signed with a private key that never leaves the enclave and a corresponding certificate is produced that proves that the correct code signed that output. This can be used as evidence of the bank account balance that can be verified offline with just the AWS Nitro Enclave root public key.
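The secret provider's release decision in the steps above can be sketched as follows. This is a simplified model under stated assumptions: the class and field names are hypothetical, `chain_verified` stands in for real verification of the certificate's signature chain up to the AWS Nitro Enclave root key, and the actual public-key encryption of the credentials is left out.

```python
import hmac
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Attestation:
    measurement: str           # hash of the code running in the enclave
    enclave_public_key: bytes  # key whose private half stays in the enclave
    chain_verified: bool       # stand-in for checking the signature chain


@dataclass(frozen=True)
class Policy:
    provider_id: str           # unique id of this secret provider app
    expected_measurement: str  # measurement of the approved function
    credentials: bytes


@dataclass(frozen=True)
class Release:
    recipient_key: bytes  # credentials must be encrypted to this key before sending
    credentials: bytes


def release(policy: Policy, requested_id: str,
            attestation: Attestation) -> Optional[Release]:
    """Release credentials only to approved code, addressed to the right provider."""
    if requested_id != policy.provider_id:
        return None  # wrong id: the request simply fails
    if not attestation.chain_verified:
        return None  # certificate not rooted in the trusted key
    if not hmac.compare_digest(attestation.measurement,
                               policy.expected_measurement):
        return None  # wrong function: measurement mismatch
    return Release(attestation.enclave_public_key, policy.credentials)


# Hypothetical values, for illustration only.
policy = Policy("provider-123", "ab" * 48, b"bank-api-token")
good = Attestation("ab" * 48, b"enclave-pub-key", True)
wrong_code = Attestation("cd" * 48, b"attacker-pub-key", True)

print(release(policy, "provider-123", good) is not None)    # True
print(release(policy, "provider-123", wrong_code) is None)  # True
print(release(policy, "provider-999", good) is None)        # True
```

Because the credentials are bound to the public key carried inside the verified certificate, an intercepted certificate or ciphertext is of no use outside the enclave that holds the matching private key.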

Other use-cases

This capability can replace Web3 oracles and can also simulate zero-knowledge proofs.

vData example walkthrough

vData works very similarly to vFunctions, except that an Apache Spark job is run instead of a Python function.

Credentials to data lakes are provided via a secrets provider app and released only if the Spark job is an approved job registered with the secrets provider.

Finally the produced output can be certified just like with vFunctions.

This enables safe monetization and collaboration on private data.